Discover 9 actionable CI/CD pipeline best practices to improve your software delivery. Learn to automate testing, secure your pipeline, and deploy faster.

A slow or unreliable deployment process isn't just a technical headache; it's a business problem. If your competitor can ship features and fix bugs faster than you, you're already falling behind. This is where mastering your Continuous Integration and Continuous Deployment (CI/CD) pipeline pays off. Think of your CI/CD pipeline as an automated assembly line that takes new code from a developer's computer and delivers it safely to your users. Simply having a pipeline isn't enough; you need to follow proven CI/CD pipeline best practices to make it fast, reliable, and secure.

This guide will walk you through nine essential best practices that successful product and development teams use to ship better software, faster. We'll go beyond the theory and provide practical, actionable advice with real-world examples. You'll learn how to catch bugs automatically, manage your servers with code, build security into your process from the start, and get instant feedback on your changes. By the end, you'll have a clear roadmap for turning your pipeline into a powerful tool that helps you innovate and deliver value to your customers with confidence.

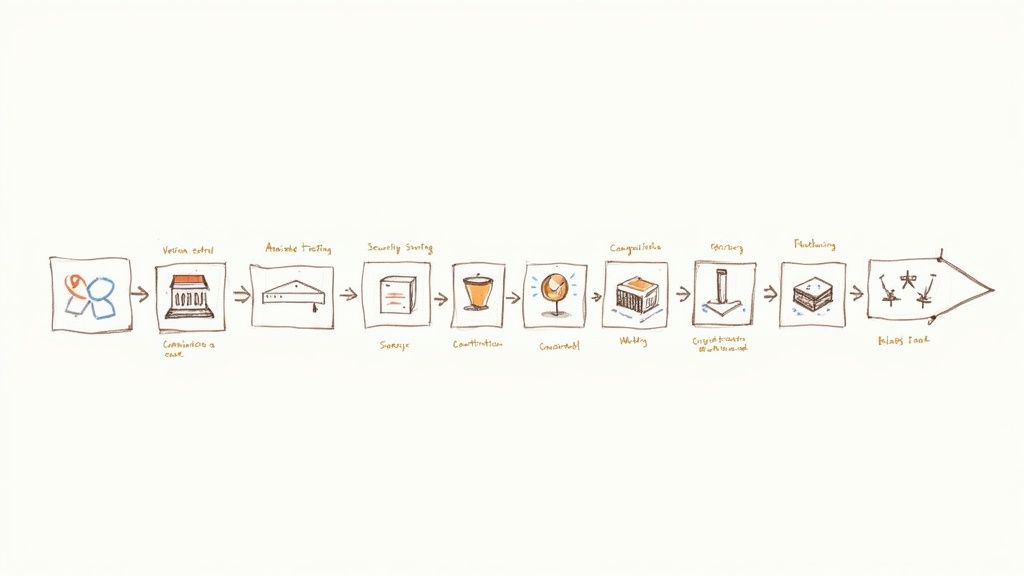

The foundation of any great CI/CD pipeline is having a single source of truth. This means everything needed to build, test, and run your application should be stored in a version control system like Git. This goes way beyond just your application's code.

"Everything as Code" means you also store your server configurations (using tools like Terraform), your pipeline definitions (the Jenkinsfile or .github/workflows/main.yml file), and even your database update scripts. When you do this, every change—whether to the app, the servers, or the pipeline itself—becomes a "commit." It's a trackable, reviewable, and reversible record of what happened, who did it, and why. This is a core tenet of modern CI/CD pipeline best practices, turning what was once a messy, manual process into a clear and predictable science.

Imagine a developer at a fintech startup makes a "small" manual change to a test server to fix a quick issue. The change isn't tracked. A week later, the QA team is pulling their hair out trying to reproduce a bug that only happens on that one server. They waste days because there's no record of the manual tweak. The root cause? The server configuration was no longer in sync with what was stored in Git.

By treating your Git repository as the ultimate source of truth, you eliminate the classic "it works on my machine" problem. You ensure that what's in your main branch is exactly what gets deployed, making your releases predictable and rollbacks simple and safe.

Getting started is about changing your team's mindset and taking a few practical steps:

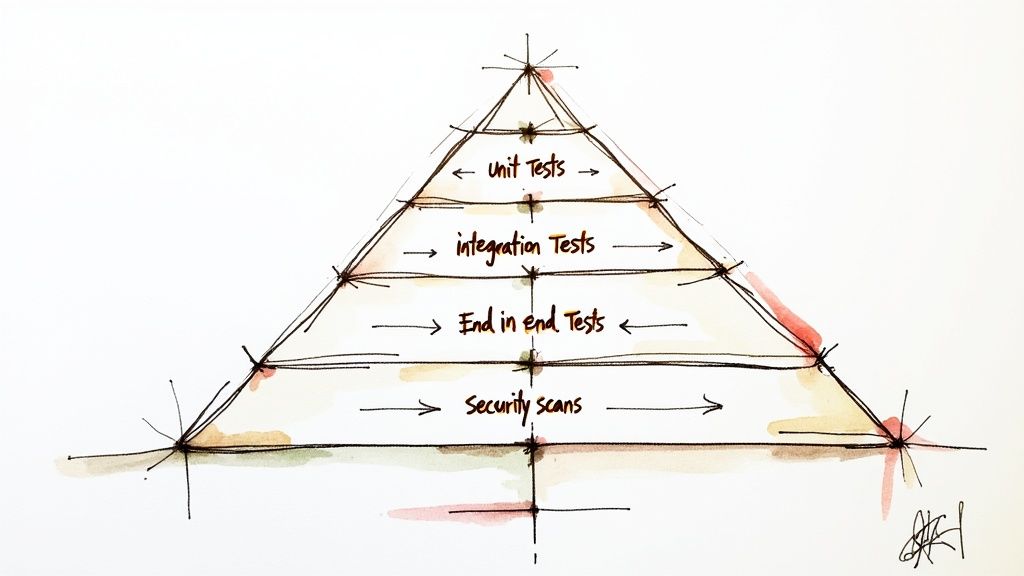

A CI/CD pipeline without automated testing is just a fast way to ship bugs to your users. The goal here is to build a safety net of automated tests directly into your pipeline. These tests act as "quality gates," checking your code at every stage, from small individual functions to the entire user experience. This is a non-negotiable part of modern CI/CD pipeline best practices, ensuring that speed doesn't come at the expense of quality.

This strategy involves more than just simple unit tests. A complete testing plan includes integration tests (to make sure different parts of your app work together), end-to-end (E2E) tests (to simulate a real user's journey), and security scans. By automating this "testing pyramid," your team gains confidence with every new piece of code, allowing you to deploy frequently without fear.

Picture a growing e-commerce startup. Their pipeline only runs basic unit tests. A developer pushes a change that passes all the tests, but it accidentally breaks the connection to the payment processor. The bug isn't caught until after it's live, and customers start complaining that they can't buy anything. The company loses revenue and customer trust. A simple, automated integration test would have caught this disaster before it ever reached production.

By building a safety net of automated tests, you shift quality control from a slow, manual process at the end of the cycle to a continuous, automated check. This gives developers instant feedback, reduces the burden on your QA team, and stops critical bugs from ever reaching your users.

A multi-level testing strategy involves running different tests at the right time in your pipeline:
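As a rough illustration of "quality gates," here is a minimal Python sketch that runs test stages in order and stops at the first failure, so slow stages never start once a fast one has failed. The `Stage` class and `run_quality_gates` function are hypothetical names made up for this example; in a real pipeline your CI tool's own stage ordering does this job.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class Stage:
    """One quality gate: a name plus a check that returns True on success."""
    name: str
    check: Callable[[], bool]


def run_quality_gates(stages: List[Stage]) -> Tuple[bool, List[str]]:
    """Run stages in order and stop at the first failure.

    Returns (passed, names_of_stages_that_ran).
    """
    ran: List[str] = []
    for stage in stages:
        ran.append(stage.name)
        if not stage.check():
            # Fail fast: later, slower stages never start.
            return False, ran
    return True, ran
```

Listing the stages as unit, then integration, then E2E puts the cheapest checks first, so most failures surface within the first few minutes.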

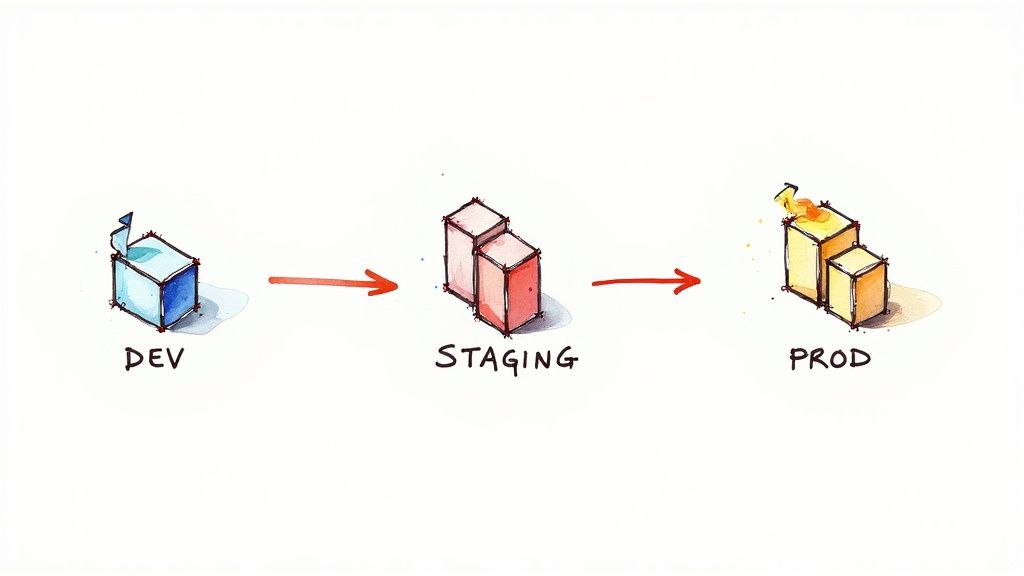

The "Build Once, Deploy Anywhere" principle is a simple but powerful idea that solves the problem of inconsistencies between different environments (like development, testing, and production). The rule is this: you create a single, unchangeable build package, called an artifact, at the very beginning of your pipeline. This artifact, which could be a Docker image, a .jar file, or a zipped folder, contains your code and all its dependencies. This exact same package is then promoted through every environment, from testing to staging to production, without ever being changed.

This ensures that the package you tested in your staging environment is the exact same one that runs in production. Environment-specific settings (like database connections) are injected when the app starts, not built into the artifact itself. This makes the entire process predictable and eliminates a whole class of frustrating, environment-specific bugs.

Let's say a developer's machine has a slightly different version of a system library than the production server. A build script runs fine locally but fails during the production deployment. Worse, it might create a hidden bug that only shows up under heavy traffic. The QA team thought they were approving a solid build, but they were actually testing something fundamentally different from what the customers would get.

By creating one unchangeable artifact, you ensure that what you test is what you deploy. Your testing stays meaningful, and you eliminate most of those painful "it worked in staging but broke in production" emergencies.

Adopting this practice means separating your build process from your deployment configuration:
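One way to picture that separation: the artifact contains only code, and the application reads its environment-specific settings when it starts. The sketch below assumes the settings arrive as environment variables; `DATABASE_URL`, `LOG_LEVEL`, `AppConfig`, and `load_config` are hypothetical names chosen for illustration.

```python
import os
from dataclasses import dataclass
from typing import Mapping


@dataclass(frozen=True)
class AppConfig:
    database_url: str
    log_level: str


def load_config(env: Mapping[str, str] = os.environ) -> AppConfig:
    """Read environment-specific settings at startup, not at build time.

    DATABASE_URL and LOG_LEVEL are hypothetical variable names.
    """
    try:
        database_url = env["DATABASE_URL"]
    except KeyError:
        # Refuse to start rather than fall back to a value baked into the build.
        raise RuntimeError(
            "DATABASE_URL must be set by the deployment environment"
        ) from None
    return AppConfig(database_url=database_url, log_level=env.get("LOG_LEVEL", "INFO"))
```

The same artifact can then run in staging and production, with only the injected variables differing between them.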

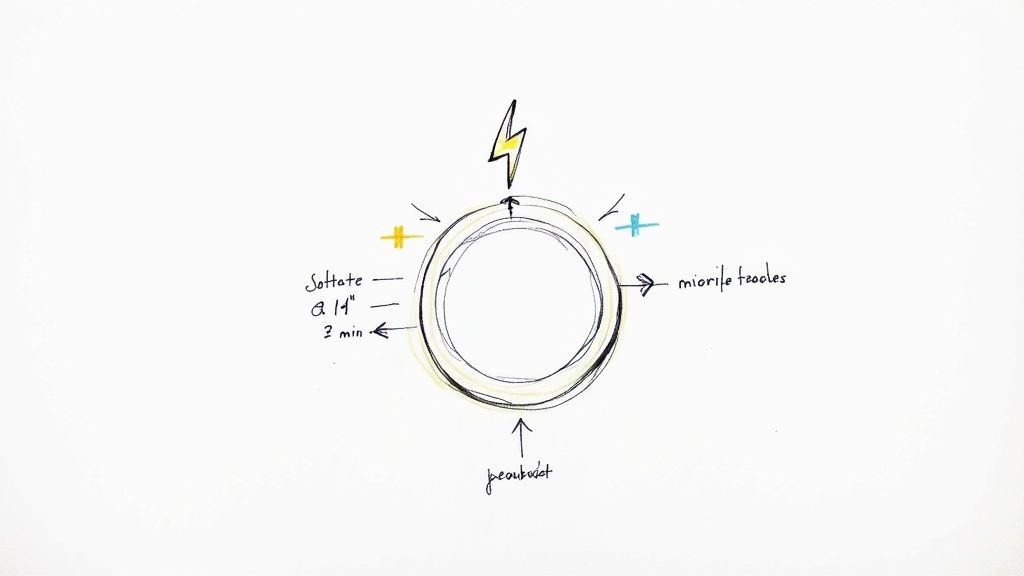

A slow CI/CD pipeline kills productivity. The core idea behind fast feedback loops is to design your pipeline to give developers clear and actionable feedback as quickly as possible after they commit a change. Instead of waiting an hour to learn that a simple typo broke a test, a developer should know within minutes. This allows them to fix the problem immediately while the code is still fresh in their mind, preventing context switching and keeping momentum high.

This principle is a hallmark of elite engineering teams at companies like Google and Shopify, who have invested heavily in making their pipelines incredibly fast. This is one of the most impactful CI/CD pipeline best practices for improving developer happiness and productivity.

Imagine a developer pushes a code change and immediately starts working on their next task. Forty-five minutes later, they get a Slack notification: "Build failed." Now they have to stop what they're doing, try to remember what they were working on an hour ago, figure out what went wrong, and push a fix. This stop-and-start cycle is a massive waste of time and a huge source of frustration.

A pipeline that gives feedback in under ten minutes empowers developers to experiment and iterate without fear. It turns the CI process from a slow, frustrating gatekeeper into a helpful assistant that speeds up the delivery of high-quality code.

Achieving fast feedback requires a strategic focus on optimizing your pipeline:
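One common optimization is running tests in parallel. The sketch below is an assumption on our part rather than a prescribed technique: it splits tests across a fixed number of parallel runners using recorded durations, always handing the next-longest test to the least-loaded runner. The function name and the duration data are hypothetical.

```python
import heapq
from typing import Dict, List


def split_into_shards(durations: Dict[str, float], shard_count: int) -> List[List[str]]:
    """Greedy split: longest tests first, each to the currently lightest shard."""
    if shard_count < 1:
        raise ValueError("shard_count must be at least 1")
    shards: List[List[str]] = [[] for _ in range(shard_count)]
    # Heap of (total_seconds_assigned, shard_index).
    heap = [(0.0, i) for i in range(shard_count)]
    heapq.heapify(heap)
    # Sort by duration descending, then by name so the result is deterministic.
    for test, seconds in sorted(durations.items(), key=lambda kv: (-kv[1], kv[0])):
        total, index = heapq.heappop(heap)
        shards[index].append(test)
        heapq.heappush(heap, (total + seconds, index))
    return shards
```

With balanced shards, the wall-clock time of the test stage approaches the total test time divided by the number of runners, instead of the sum of every test.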

A CI/CD pipeline shouldn't just build your code; it should also manage the environment where that code runs. Infrastructure as Code (IaC) is the practice of managing your servers, databases, and networks using code, rather than clicking around in a web console. This extends the "single source of truth" principle to your entire technology stack.

By defining your infrastructure in files using tools like Terraform or AWS CloudFormation, you can version, review, and automate your environments just like your application. This eliminates "configuration drift"—the tiny, untracked differences between your development, staging, and production environments that cause so many headaches. For teams looking to scale, IaC turns infrastructure management from a manual, error-prone task into an automated, reliable process.
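To make "configuration drift" concrete, here is a toy Python sketch that compares the settings declared in Git with the settings found on a live server. It is not how Terraform or CloudFormation work internally; `detect_drift` and the example keys are hypothetical, and real tools compare far richer resource state.

```python
from typing import Any, Dict, List


def detect_drift(desired: Dict[str, Any], actual: Dict[str, Any]) -> List[str]:
    """Return human-readable differences between the declared and live settings."""
    findings: List[str] = []
    for key in sorted(desired.keys() | actual.keys()):
        if key not in actual:
            findings.append(f"missing: {key} (declared {desired[key]!r})")
        elif key not in desired:
            # Something was changed by hand and never recorded in Git.
            findings.append(f"untracked: {key} = {actual[key]!r}")
        elif desired[key] != actual[key]:
            findings.append(
                f"changed: {key} declared {desired[key]!r}, found {actual[key]!r}"
            )
    return findings
```

An empty result means the live environment matches what is in version control; anything else is a manual change waiting to cause trouble.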

Imagine a popular e-commerce site goes down during a big sale. The operations team scrambles to manually set up a new server, but in the rush, they forget to apply a critical firewall rule. The new server can't connect to the database, making the outage even longer and costing the company thousands in lost sales. If their infrastructure had been defined as code, they could have launched a new, correctly configured server with a single command.

With Infrastructure as Code, you can create and replicate entire environments with total confidence. This makes things like disaster recovery, scaling for traffic spikes, and setting up new test environments incredibly simple and reliable.

Integrating IaC into your workflow means treating your infrastructure with the same care as your application code:

In a typical setup, a pull request that changes infrastructure code triggers terraform plan to show what will happen. Once approved and merged, the pipeline runs terraform apply to make the change.

The final goal of a CI/CD pipeline is to get code into production safely and without any manual steps. This requires fully automated deployments. An advanced and highly effective strategy for this is the blue-green deployment, which allows you to release new versions with zero downtime.

Here's how it works: you have two identical production environments, which we'll call "blue" (the current live version) and "green" (the new version). All user traffic is going to the blue environment. Your pipeline deploys the new version of your application to the green environment, which is not yet receiving any live traffic. Once the green environment is fully deployed and passes all health checks, you flip a switch at the load balancer, and all new user traffic is instantly routed to the green environment. The old blue environment is kept running for a short time, so if anything goes wrong, you can flip the switch back just as instantly.
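The flow above can be sketched in a few lines of Python. This is a toy model, not a real load balancer integration: `BlueGreenRouter` is a hypothetical class, and the health check is passed in as a function so you can see where a real check would plug in.

```python
from typing import Callable, Dict


class BlueGreenRouter:
    """Toy stand-in for a load balancer that sends all traffic to one environment."""

    def __init__(self, live: str = "blue") -> None:
        self.live = live
        self.versions: Dict[str, str] = {"blue": "", "green": ""}

    @property
    def idle(self) -> str:
        """The environment that is not receiving traffic right now."""
        return "green" if self.live == "blue" else "blue"

    def release(self, version: str, is_healthy: Callable[[str], bool]) -> bool:
        """Deploy to the idle environment; switch traffic only if it is healthy."""
        target = self.idle
        self.versions[target] = version  # deploy without touching live traffic
        if not is_healthy(target):
            return False  # users keep hitting the old, working environment
        self.live = target  # the "flip the switch" step
        return True

    def rollback(self) -> None:
        """Point traffic back at the previous environment, which is still running."""
        self.live = self.idle
```

Notice that a failed health check leaves `live` untouched, which is exactly why a broken deployment never becomes a customer-facing outage in this model.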

Think about a team trying to push an urgent update during business hours. A traditional deployment fails halfway through, leaving the application in a broken state. The result is a customer-facing outage, a frantic scramble to manually roll back the changes, and lost revenue. With a blue-green strategy, this entire crisis is avoided.

By automating advanced deployment patterns like blue-green, you separate the act of deploying from the act of releasing. You can deploy new code to production at any time with confidence, knowing you can expose it to users with a simple, instant switch and roll it back just as easily if needed. This is one of the most valuable CI/CD pipeline best practices for mission-critical applications.

Integrating this level of automation requires careful setup:

Give your application a health check endpoint (such as /health) that the pipeline can check to confirm the new version is running correctly before switching traffic.

Treating security as the final step before release is a recipe for disaster. It leads to last-minute delays and leaves you vulnerable to attacks. The modern approach, known as DevSecOps, is to "shift left" by building security checks directly into your CI/CD pipeline from the very beginning. This makes security a shared responsibility for the entire team, not just the job of a separate security department.

This practice turns security from a bottleneck into an automated, continuous process. By automatically scanning for vulnerabilities every time code is changed, you can find and fix problems when they are small and easy to deal with. Implementing this CI/CD pipeline best practice helps ensure your applications are built to be secure from the ground up. In short, shifting security left means adopting secure-by-design practices throughout your pipeline.

Imagine a SaaS company finds a major security hole just days before a big launch. The security team has no choice but to block the release, forcing developers to drop everything and work on a frantic, high-pressure fix. The launch is delayed, and customer trust is damaged. This whole crisis could have been prevented if an automated security scanner in the pipeline had flagged the vulnerable code the moment it was written.

By making security a core part of your pipeline, you give developers immediate feedback on potential issues. This allows them to learn and fix vulnerabilities when it's cheapest and easiest to do so, preventing security from ever becoming a last-minute emergency.

Integrating security involves adding different automated checks throughout your pipeline:
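As one small example of such a check, here is a deliberately simple Python sketch of a secret scanner that flags lines that look like hardcoded credentials. The two patterns are illustrative assumptions only, and `scan_for_secrets` is a hypothetical helper; dedicated scanning tools use much larger, carefully tuned rule sets.

```python
import re
from typing import List, Tuple

# Illustrative patterns only; real scanners ship far larger, tuned rule sets.
PATTERNS = {
    "aws-access-key-id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "hardcoded-password": re.compile(r"(?i)\bpassword\s*=\s*['\"][^'\"]+['\"]"),
}


def scan_for_secrets(source: str) -> List[Tuple[int, str]]:
    """Return (line_number, rule_name) for every line that matches a pattern."""
    findings: List[Tuple[int, str]] = []
    for number, line in enumerate(source.splitlines(), start=1):
        for rule, pattern in PATTERNS.items():
            if pattern.search(line):
                findings.append((number, rule))
    return findings
```

A pipeline step could fail the build whenever the findings list is non-empty, giving the developer feedback minutes after the commit rather than days before launch.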

Your job isn't done when the code is deployed. You need to know how it's performing in the real world. This is where monitoring and observability come in. Monitoring tells you when something is wrong (e.g., "CPU usage is at 95%!"). Observability helps you understand why it's wrong by giving you deep insights into your system's behavior.

This proactive approach is a hallmark of mature CI/CD pipeline best practices. It helps you move from a reactive "firefighting" mode to a predictive, data-driven one. By collecting metrics (the numbers), logs (the event records), and traces (the story of a user request), you can spot problems before your users do.

Let's say a music streaming service deploys a new song recommendation feature. A subtle bug causes the new code to make way too many database calls. Without proper monitoring, this could go unnoticed for days, slowly degrading performance for everyone and driving up server costs. By the time users start complaining about the app being slow, the damage is already done.

Observability allows you to ask questions about your system that you didn't think of in advance. This is crucial for troubleshooting complex problems in modern applications, where the root cause is rarely obvious from a single error message.

Building observability into your process involves gathering three key types of data:
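To show how metrics, logs, and traces fit together, here is a minimal standard-library sketch. It assumes an in-memory counter and log list purely for illustration, and `handle_request` is a hypothetical wrapper; a real system would ship this data to a monitoring backend, and a real trace would link many spans rather than carry a single id.

```python
import json
import time
import uuid
from collections import Counter
from typing import Callable, Dict, List

metrics: Counter = Counter()  # metrics: simple named counters
log_lines: List[str] = []     # logs: one JSON document per event


def handle_request(
    name: str, work: Callable[[], None], trace_id: str = ""
) -> Dict[str, object]:
    """Run `work`, then record a metric and a structured log tagged with a trace id."""
    trace_id = trace_id or uuid.uuid4().hex  # the id that ties related events together
    started = time.perf_counter()
    status = "ok"
    try:
        work()
    except Exception:
        # Swallowed here to keep the sketch short; real code would re-raise or report.
        status = "error"
    duration_ms = (time.perf_counter() - started) * 1000
    metrics[f"{name}.{status}"] += 1
    event = {
        "trace_id": trace_id,
        "request": name,
        "status": status,
        "duration_ms": round(duration_ms, 3),
    }
    log_lines.append(json.dumps(event))
    return event
```

Because every log line carries the same `trace_id` as the request that produced it, you can later search for one slow request and see everything that happened along the way.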

One of the most common and painful parts of a deployment is updating the database. Too often, application code is deployed automatically, but database changes are handled manually by a database administrator (DBA). This creates a huge bottleneck and a major risk of failure. A core CI/CD pipeline best practice is to manage your database with the same automated rigor as your application code.

This means automating and versioning every single database change. Tools like Flyway or Liquibase allow you to write database changes as simple, version-controlled scripts that are applied automatically by your pipeline during a deployment. This approach ensures that your database structure and your application code are always in sync, eliminating a huge source of human error.

Picture this: a new feature requires adding a new column to the users table. The application code is deployed automatically by the pipeline. But the DBA is on vacation, and the manual ticket to run the database script was forgotten. The new code immediately starts crashing because it's trying to access a database column that doesn't exist, causing a major outage for all users. This entire problem would have been prevented with automated database migrations.

By integrating database migrations directly into your CI/CD pipeline, you guarantee that your database schema always matches what your application code expects. This makes deployments safer, rollbacks more predictable, and coordination between developers and operations seamless.

Integrating database changes into your pipeline requires a systematic approach:
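Here is a minimal sketch of the idea behind versioned migrations, using Python's built-in sqlite3 module. It is not how Flyway or Liquibase are implemented; the `schema_migrations` table, the `migrate` function, and the two example migrations are hypothetical names chosen for illustration.

```python
import sqlite3
from typing import List, Tuple

# Hypothetical migrations: (version, SQL). In practice these live as files in Git.
MIGRATIONS: List[Tuple[int, str]] = [
    (1, "CREATE TABLE users (id INTEGER PRIMARY KEY, email TEXT NOT NULL)"),
    (2, "ALTER TABLE users ADD COLUMN display_name TEXT"),
]


def migrate(
    conn: sqlite3.Connection, migrations: List[Tuple[int, str]] = MIGRATIONS
) -> List[int]:
    """Apply pending migrations in version order; return the versions applied."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS schema_migrations (version INTEGER PRIMARY KEY)"
    )
    applied = {row[0] for row in conn.execute("SELECT version FROM schema_migrations")}
    newly_applied: List[int] = []
    for version, sql in sorted(migrations):
        if version in applied:
            continue  # already run on this database, so skip it
        conn.execute(sql)
        conn.execute("INSERT INTO schema_migrations (version) VALUES (?)", (version,))
        conn.commit()
        newly_applied.append(version)
    return newly_applied
```

Running `migrate` as a pipeline step before the new application version starts means the column exists by the time the code asks for it, and running it twice is harmless because applied versions are skipped.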

Building a world-class CI/CD pipeline is about creating a fast, reliable, and automated path from an idea to your users. We've covered the nine essential pillars that make this possible. From versioning everything to automating tests, from blue-green deployments to database migrations, each of these CI/CD pipeline best practices helps you build a powerful engine for delivering software.

This engine lets you ship better software, faster. However, the quality of what you ship ultimately depends on the code you put into that engine. A sophisticated pipeline will happily and efficiently deploy buggy, insecure, or messy code straight to production. This is where many teams hit a plateau. Their delivery is fast, but they're still spending too much time fixing bugs that should have been caught much earlier.

The ultimate "shift left" strategy isn't just about testing earlier in the pipeline—it's about ensuring code quality before the pipeline even starts. The manual code review process, while critical, is often a major bottleneck. It's slow, prone to human error, and takes your most experienced engineers away from solving tough problems.

Key Takeaway: A world-class CI/CD pipeline automates the delivery of code. The next step is to automate the quality assurance of that code before it ever gets to the pipeline.

This is the gap that AI-powered tools are now filling. By introducing intelligent automation at the pull request stage, you can give developers instant, expert-level feedback on potential bugs, security flaws, and performance issues. Imagine catching a subtle error or a security risk moments after the code is written, instead of days later during a manual review or—even worse—after it causes a problem in production. This proactive approach ensures your finely-tuned CI/CD pipeline is always working with clean, reliable, and secure code, amplifying the benefits of all the best practices we've discussed. It helps your team move from a reactive culture of fixing bugs to a proactive culture of preventing them.

Ready to ensure only the highest quality code enters your automated pipeline? Sopa uses AI to automate code reviews, catching bugs and vulnerabilities before they are ever merged. It acts as an expert pair programmer for your entire team, freeing up senior developers and dramatically reducing your bug count. Start your free Sopa trial today and see how AI can supercharge your CI/CD best practices.